As Open RAN deployments become faster and demand tighter timing, synchronization has become critical to consistent performance. It is a complex task: devices must be time-synchronized to a UTC reference through IEEE PTP/SyncE-based boundary clocks, slave clocks, and PRTC clocks with GNSS receivers, and the network needs precise, real-time monitoring to support customer SLAs. As thousands of Open RAN devices are deployed, monitoring the network for associated outages and taking appropriate action will require advancements in AI/ML. The first lessons learned from the field reveal a way forward.

Accurate and reliable timing has long been necessary to maintain critical telecommunications network capabilities, such as cellular operations, cell coverage and efficiency, precise handoff between cell towers, and more. Telecommunications service providers can implement various methods to meet strict phase and time synchronization requirements. The objective of each method is to synchronize all nodes with the primary reference time clock (PRTC), which takes its time from the Global Positioning System (GPS) or another Global Navigation Satellite System (GNSS). GNSS refers to a constellation of satellites that transmit positioning and timing data from space to GNSS receivers, which use it as a time reference.

However, the location of the timing source may vary depending on network topology, cost, and application. Typically, telecommunications network nodes are synchronized using Precision Time Protocol (PTP) and Synchronous Ethernet (SyncE) technologies, implemented in IEEE 1588 PTP grandmasters (the PRTC source), boundary clocks, transparent clocks, and slave clocks within the network.

Open RAN is an industry-wide initiative to develop open interfaces, divide the RAN into individual network elements, and enable interoperability between hardware vendors. Open RAN standardization aims to give operators the flexibility to mix and match radio network components to achieve the best performance. This flexibility is not without complexity, and it makes synchronization between components even more critical. Specifically, since timing applications are distributed across the front-haul (FH) and mid-haul (MH) network, including the distributed unit (DU) and radio unit (RU), the applications that require synchronization on the DU and RU must stay synchronized to maintain very good sector/cell key performance indicators (KPIs).

In an existing radio access network (RAN), all end applications requiring synchronization reside in a single eNodeB. In 3GPP split architectures (Open RAN), disaggregated applications require distributed synchronization across the network, including DU, RU and centralized unit (CU). This synchronization distribution increases the burden of managing synchronization networks for the operator.

Eyes on KPIs

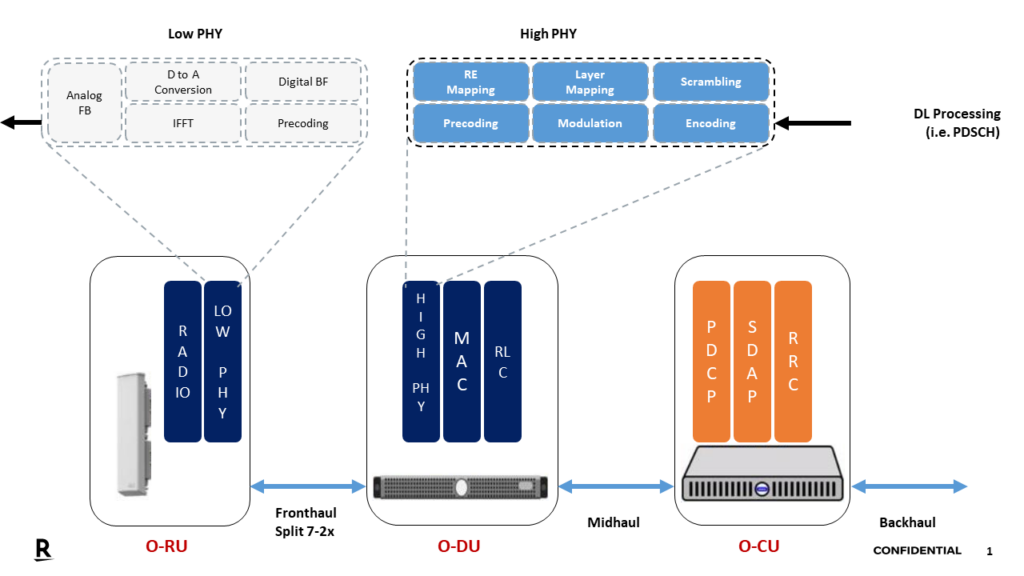

With the evolution of IEEE PTP, the dependence of the radio network on GNSS systems has significantly decreased. GNSS is, however, still required as the PRTC source as defined in the ITU-T G.8272 and ITU-T G.8272.1 specifications, depending on the deployment type. This is true for the Open RAN DU, which is a logical node hosting the RLC/MAC/High-PHY layers based on a lower-layer functional split, and the RU, which is a logical node hosting the Low-PHY layer and RF processing based on the same lower-layer functional split (Figure 1). IEEE 1588 grandmaster clocks (the PRTC clock source) are placed in the central data center within the network to provide clocking to DUs and RUs in front-haul or mid-haul networks.

Figure 1. In a split O-RAN 7.2 architecture, the Low-PHY functions reside in the radio units while the High-PHY functions reside in the distributed unit. Image: https://www.techplayon.com/o-ran-fronthaul-spilt-option-7-2x/

Every PRTC clock device with an integrated GNSS receiver or intermediate boundary clock source that provides time to the downstream network is susceptible to potential timing outages.

Depending on where the GNSS source is installed, the impact on the network varies. For example, in an O-RAN Alliance-compliant network in the LLS-C1 configuration, where the DU acts as the PTP grandmaster providing clocking to the RUs over the front-haul network, any GPS failure on the DU affects both the DU and the connected RUs.

On the other hand, when GPS is sourced at the RU, which has an integrated GPS receiver and acts as the PRTC synchronization source for its applications, a failure affects only that RU. The placement of the source also has implications for the PTP/SyncE KPIs distributed across the network from the PRTC source.
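
The dependence of outage scope on source placement can be illustrated with a minimal sketch. It assumes the synchronization hierarchy is available as a simple mapping from each node to the node it takes timing from; the node names and the `sync_tree` structure are hypothetical and not part of any O-RAN interface.

```python
from collections import defaultdict


def impacted_nodes(sync_tree, failed_source):
    """Return the failed source plus every node that derives timing from it."""
    # Invert the "node -> timing parent" mapping into "parent -> children".
    children = defaultdict(list)
    for node, parent in sync_tree.items():
        if parent is not None:
            children[parent].append(node)

    # Walk downstream from the failed source.
    impacted, stack = [], [failed_source]
    while stack:
        node = stack.pop()
        impacted.append(node)
        stack.extend(children[node])
    return sorted(impacted)


# Hypothetical topology: du-1 is the grandmaster for ru-1 and ru-2 (LLS-C1 style),
# while ru-3 has its own GNSS receiver and is its own timing source.
sync_tree = {"du-1": None, "ru-1": "du-1", "ru-2": "du-1", "ru-3": None}

print(impacted_nodes(sync_tree, "du-1"))  # ['du-1', 'ru-1', 'ru-2']
print(impacted_nodes(sync_tree, "ru-3"))  # ['ru-3']
```

A GNSS failure at the DU propagates to every RU beneath it, whereas a failure at a self-timed RU stays contained to that RU.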

All timing and synchronization configuration and provisioning for a cloud-based deployment takes place over a network. Outages are increasing, and networks must be closely monitored so that corrective action can be taken. This requires a central, accurate, real-time monitoring mechanism on the cloud network to retrieve timing KPIs, derive insights that estimate outages from key metrics, and take reactive and corrective actions that avoid impact on cell KPIs and increase cell availability.
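
As a rough illustration of such a monitoring step, the sketch below checks a batch of timing KPI samples against alarm thresholds. The `TimingSample` fields, the node names, and the threshold values are assumptions made for this example; only the clockClass value 6 (a clock locked to a primary reference) comes from IEEE 1588.

```python
from dataclasses import dataclass
from typing import Optional

LOCKED_CLOCK_CLASS = 6   # IEEE 1588 clockClass of a clock locked to a primary reference
MAX_OFFSET_NS = 100      # illustrative alarm threshold, not taken from any specification
MIN_SATELLITES = 4       # illustrative minimum number of tracked satellites


@dataclass
class TimingSample:
    node: str
    offset_ns: float                  # measured offset from the grandmaster
    gm_clock_class: int               # clockClass advertised by the node's grandmaster
    satellites: Optional[int] = None  # None for nodes without a GNSS receiver


def evaluate(sample):
    """Return the list of KPI checks that this sample fails."""
    findings = []
    if abs(sample.offset_ns) > MAX_OFFSET_NS:
        findings.append("offset")
    if sample.gm_clock_class != LOCKED_CLOCK_CLASS:
        findings.append("clock_class")
    if sample.satellites is not None and sample.satellites < MIN_SATELLITES:
        findings.append("gnss")
    return findings


samples = [
    TimingSample("du-1", 12.0, 6, satellites=9),
    TimingSample("ru-1", 140.0, 6),
    TimingSample("du-2", 20.0, 7, satellites=2),
]

# Keep only the nodes with at least one failed check.
report = {s.node: evaluate(s) for s in samples if evaluate(s)}
print(report)  # {'ru-1': ['offset'], 'du-2': ['clock_class', 'gnss']}
```

In a real deployment the samples would arrive from the cloud platform's telemetry rather than a static list, and the thresholds would follow the operator's own timing budget.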

Root Causes of Timing-Based Outages

As Open RAN networks proliferate, operators must understand the primary causes of timing outages, all of which require real-time tracking to detect. In our work in Japan and other countries, we have identified:

- GNSS signal jamming, intentional and unintentional

- GNSS signal spoofing, including across multiple constellations and frequency bands

- Signal blockages and multipath errors caused by tree canopies in rural areas or large glass structures in urban areas

- Ionospheric effects and geographic issues

- Hardware faults in the connection to GNSS receivers, such as surge arrester issues due to lightning or cable faults

- Bad weather conditions

- Leap second warnings, including the need to notify all applications of upcoming leap second additions/removals

- PTP packet drops, clock quality degradations, and clock advertisements

- SyncE clock quality degradations

There are also security issues to consider. Modern networks provide vital infrastructure for business, mission, and society-critical applications that are of national interest. Between July 2020 and June 2021, the telecommunications sector was the most targeted sector when it came to GNSS security threats, with 40% of attacks compared to 10% for the next vertical (Source: EUROCONTROL EVAIR).

Detect and analyze timing and synchronization failures

Based on our experience, network operators can take the following steps to initiate mitigations for timing and synchronization failures, including those related to GNSS security threats:

- Develop a mechanism for the O-Cloud interface to detect and analyze GNSS signal jamming, interference, or spoofing before conditions deteriorate.

- Analyze poor weather conditions that may interfere with GNSS signals based on the history of GNSS outages detected on a DU. This data can be used to predict and anticipate the impact these conditions could have on neighboring and co-located DUs, allowing an operator to initiate corrective actions more quickly.

- Likewise, analyze GNSS error conditions and predict how these conditions could potentially impact neighboring and co-located DUs to initiate corrective actions.

- Detect and analyze Packet Timing Signal Failure (PTSF) conditions and initiate mitigation actions. Predict PTSF conditions and take corrective action, such as switching to another clock source, rather than waiting for failures to occur and impact the network.

This is not an exhaustive list. These mechanisms should be exposed through the O-Cloud interface, and the necessary contributions should be made to the WG6 O-Cloud API specification for the synchronization plane (S-plane), as existing datasets are not sufficient.
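
The predictive step in the last item can be sketched as follows. The example assumes that the Sync message loss ratio per observation window is available as a precursor signal, extrapolates it with a least-squares line, and switches to a backup source if the projected loss crosses a limit. The metric, the limit, the horizon, and the source names are all hypothetical; a production system would likely use a trained model rather than a straight-line fit.

```python
def predict_value(samples, horizon):
    """Extrapolate a least-squares line through `samples`, `horizon` steps ahead."""
    n = len(samples)
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    var_x = sum((x - mean_x) ** 2 for x in range(n))
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    slope = cov_xy / var_x
    return mean_y + slope * ((n - 1 + horizon) - mean_x)


def choose_source(active, loss_history, backups, limit=0.05, horizon=5):
    """Switch to the first backup if the projected loss ratio exceeds `limit`."""
    if len(loss_history) < 2 or not backups:
        return active  # not enough data, or nothing to switch to
    if predict_value(loss_history, horizon) > limit:
        return backups[0]
    return active


# Loss ratio rising by one percentage point per window: projected to exceed 5%.
print(choose_source("gm-a", [0.0, 0.01, 0.02, 0.03], ["gm-b"]))   # gm-b
# Stable loss ratio: stay on the active source.
print(choose_source("gm-a", [0.01, 0.01, 0.01, 0.01], ["gm-b"]))  # gm-a
```

Acting on the projection rather than on the failure itself is what lets the switch happen before the PTSF condition is declared.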

AI and ML for timing and synchronization

A multi-layered approach to integrating artificial intelligence (AI) and machine learning (ML) into timing and synchronization should be considered for accurate, efficient and optimized management of timing and synchronization events.

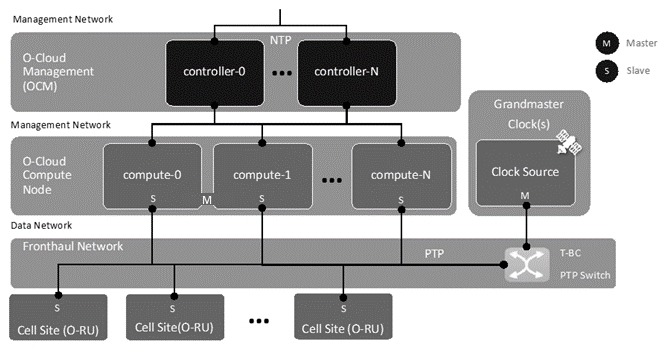

For example, a solution to timing outages can be divided into multiple layers and leverage AI/ML mechanisms to perform local learning per DU/RU, per set of DUs/RUs belonging to the same CU, or per group of O-CUs (see Figure 2). Such a solution needs methods to define the interactions between these layers in real time and non-real time, based on the type of event, alarm, or error. In practice, an operator can:

- Define detection and mitigation algorithms for local synchronization failures within DUs/RUs.

- Analyze the information exposed to rApps/xApps to develop smart mitigation actions and policies at the non-RT RIC (SMO) or near-RT RIC level.

- Create automation and AI/ML-based algorithms for timing failure detection and analysis in 5G core or evolved packet core (EPC).

Figure 2. This high-level architecture can leverage AI and ML for timing and synchronization in Open RAN networks.
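
The layering can be illustrated with a small sketch in which each DU flags anomalies against its own history (the local layer) and a group-level function decides whether the flags point to a single-node fault or an area-wide event such as jamming or weather. A z-score test stands in here for a trained model, and the function names, thresholds, and sample values are assumptions made for this example.

```python
from statistics import mean, stdev


def local_anomaly(history, latest, z_limit=3.0):
    """Local layer: flag `latest` if it deviates strongly from this node's own history."""
    spread = stdev(history)
    if spread == 0:
        return latest != mean(history)
    return abs(latest - mean(history)) / spread > z_limit


def classify_event(flags, area_fraction=0.5):
    """Group layer: decide whether flagged nodes indicate a local or area-wide event."""
    flagged = sorted(node for node, is_flagged in flags.items() if is_flagged)
    if not flagged:
        return "normal", flagged
    if len(flagged) / len(flags) >= area_fraction:
        return "area-wide", flagged
    return "local", flagged


# Hypothetical time-offset histories (ns) for three DUs under one CU.
history = {
    "du-1": [10, 12, 11, 9, 10],
    "du-2": [20, 21, 19, 20, 22],
    "du-3": [5, 6, 5, 4, 5],
}

# Only du-2 drifts: treated as a local fault.
latest = {"du-1": 11, "du-2": 95, "du-3": 5}
flags = {n: local_anomaly(history[n], latest[n]) for n in history}
print(classify_event(flags))  # ('local', ['du-2'])

# All DUs drift together: treated as an area-wide event.
latest = {"du-1": 80, "du-2": 95, "du-3": 70}
flags = {n: local_anomaly(history[n], latest[n]) for n in history}
print(classify_event(flags))  # ('area-wide', ['du-1', 'du-2', 'du-3'])
```

Separating the two decisions keeps fast, per-node detection close to the DU while leaving correlation across nodes to the layer that can see the whole group.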

Conclusion

Open RAN brings operators the promise of increased revenue streams, reduced capital expenditure and total cost of ownership, and diversity benefits. Reaping these benefits will depend in part on leveraging AI/ML technologies in timing and synchronization solutions to bring more efficiency and optimization to the management of timing networks in Open RAN and help operators to improve KPIs and customer experience.