Originally published in ForbesSeptember 30, 2024

This is the third in a series of three articles covering the business value of predictive AI, using misinformation detection as an example: item 1, article 2, article 3.

This is an established practice, but it is still not standardized. Predictive AI has been improving business operations for decades, but there is no widely adopted process to assess its potential value and deploy it accordingly.

This is the standard to follow. The model predictive evaluation process should look like this:

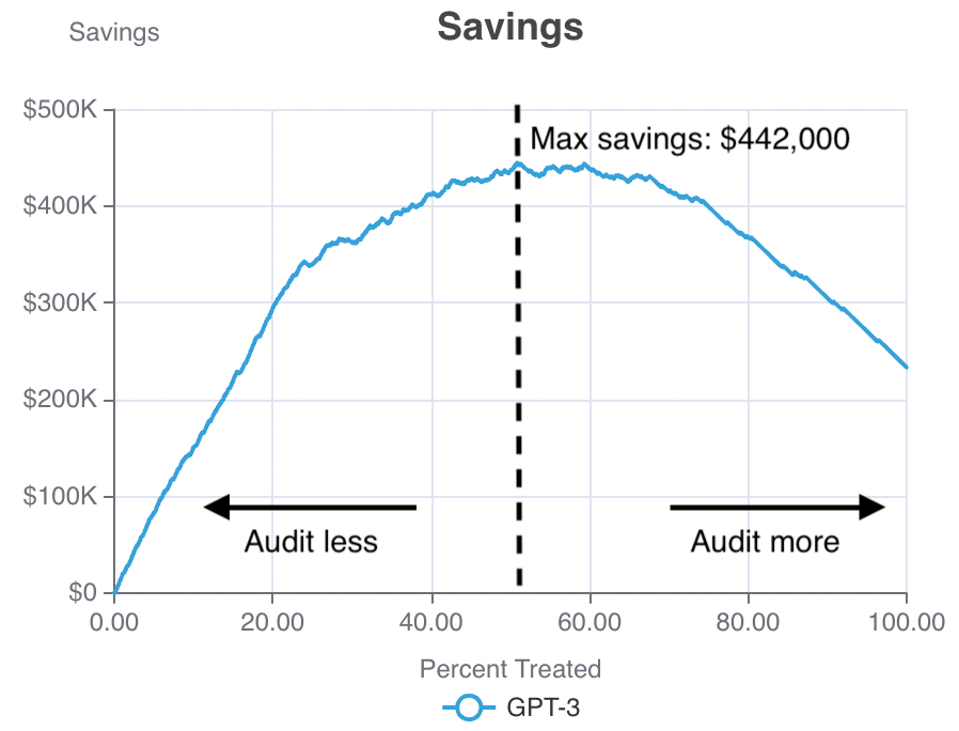

A savings curve for misinformation detection. The horizontal axis represents the share of positions audited manually and the vertical axis represents savings. – ERIC SIEGEL

As I detailed in the First of all two In the articles in this series, this savings curve above represents action versus value: The horizontal axis represents the number of social media posts manually reviewed as potential misinformation (as targeted by a predictive model) and the vertical axis represents dollars saved.

This type of view is not standard. Unfortunately, predictive AI projects rarely assess potential value in terms of simple business metrics like profits and savings. This omission contributes to the high rate with which these projects fail to launch.

Moving from model evaluation to model evaluation

Valuing a business should involve details about the business. The traditional way of doing things: evaluating predictive models only in terms of technical measuressuch as precision, recall, and accuracy, are abstract and do not involve any details about how the business is intended to use the model. In contrast, evaluating models in terms of business metrics relies on the integration of business factors.

Here are the business factors behind the savings chart example shown above:

- The number of cases, set at 200,000.

- The cost of manually auditing a publication, set at $4.

- The cost of undetected disinformation, set at $10.

While the first two can be established objectively – based on the number of applicable positions per day and labor cost – the third is subjective, so there may be no definitive way to determine its framework.

Subjective costs: misdiagnosis or missed diagnosis

It is essential to establish the cost of each error. In doing so, we can bridge a precarious gap, moving from purely predictive performance to business KPIs. Analytics consultant Tom Khabaza has long been telling us how important this is, ever since we called ML “data mining.” Her Law of Value of Data Miningstates: “There is no technical measure of value (of a model)…The only value is commercial value. »

But sometimes it seems almost impossible to pin down costs. Get a medical diagnosis. If you mistakenly tell a healthy patient that they just had a heart attack, that’s bad. You can imagine the unnecessary stress, as well as the unnecessary treatments that may be administered. But if by mistake you fail to detect a real heart attack, it’s worse. You are allowing a serious illness to go untreated. How much worse is a missed diagnosis than a positive misdiagnosis? A hundred times worse? Ten thousand times? Someone needs to put a number on this and I’m glad it’s not me.

For many business ML applications, it’s much simpler. The costs of misclassification are often obvious, based on business realities such as the cost of marketing, the cost of fraud, or the opportunity cost for each missed customer who would have responded if contacted.

But not always. Even spam detection can go wrong and cost you something immeasurable, like a missed job or even a missed date with someone you ended up marrying. A false positive means you might miss an important message, and a false negative means you have to manually filter spam from your inbox. There is no consensus on the best way to determine the relative costs of these two items, but whoever is behind your spam filter has made this decision, if not intentionally and explicitly, then at least by allowing the system to default to something arbitrary.

Sometimes decision makers need to quantify the unquantifiable. They must commit to specific costs for misclassifications, despite subjectivity and ethical dilemmas. Costs determine the development, evaluation and use of the model.

“Be sure to assign costs to FPs and FNs that are better oriented rather than just passively assuming the two costs are equal,” industry leader Dean Abbott told me, “even if you do not have a truly objective basis for doing so.”

What is the cost of disinformation?

Imagine a lawyer bursting into the room and saying, “We got slammed in the press this weekend because of the misinformation on our platform – it’s literally costing us!” »

A nervous executive might respond: “Let’s increase the assumed cost of undetected misinformation from $10 to $30. »

The savings curve for false information detection with the cost of false negatives increased to $30. – ERIC SIEGEL

As you can see, the shape of the curve has changed, moving the maximum savings point to 74%. The curve suggests the company is inspecting more posts, which makes sense, given the newly increased value of identifying and blocking misinformation.

But this is still subject to change. The $30 figure came purely from executive instinct. It’s not set in stone. In light of this inconsistency, your only recourse is to be wise about how much of a difference other potential changes will make. As you and your colleagues deliberate about cost definitions and test different values, watch the shape of this curve change. Intuitive understanding relies on visualizing the story the curves tell and the extent to which that story changes across possible cost parameters.

Here are your three takeaways from this story:

1) We must value predictive models in terms of business metrics.

2) We need to look at profit and savings curves to manage deployment tradeoffs.

3) We need to observe how the curve evolves as we adjust for business factors – those that are subject to change, uncertainty or subjectivity.

About the author

Eric Siegel is a leading consultant and former professor at Columbia University who helps companies deploy machine learning. He is the founder of the long-running group Machine Learning Week lecture series, the instructor of the famous online course “Machine Learning Leadership and Practice – End-to-End Mastery», editor-in-chief of Time for machine learning and a frequent keynote speaker. He wrote the best-seller Predictive Analytics: The power to predict who will click, buy, lie or diewhich has been used in courses at hundreds of universities, as well as The AI Handbook: Mastering the Rare Art of Deploying Machine Learning. Eric’s interdisciplinary work bridges the stubborn gap between technology and business. At Columbia, he won the Distinguished Faculty Award for teaching senior computer science courses in ML and AI. He later served as a business school professor at UVA Darden. Eric also publishes opinion pieces on analysis and social justice. You can follow it LinkedIn.