On October 16, 2024, the New York State Department of Financial Services (NY DFS) issued guidance on steps organizations can take to detect and mitigate cybersecurity risks posed by artificial intelligence (AI). While the guidance is intended for organizations licensed and regulated by NY DFS in the banking, financial services, and/or insurance industries, it provides a useful framework for organizations in all industry sectors that continue to face an increased risk of cyber threats, compounded by the advancement and proliferation of evolving AI technology.

Cyber Risks Linked to AI

As noted by the NY DFS, AI has increased organizations’ cybersecurity risks in several ways. First, threat actors use AI tools to conduct sophisticated and realistic social engineering scams by email (phishing), telephone (vishing), and text message (smishing). Second, cybercriminals can use AI tools to increase the speed, scale, number, and severity of cyberattacks against organizations’ computer networks and information systems. Third, the relative accessibility and ease of use of AI have lowered the barriers to entry for less sophisticated cybercriminals who want to attack organizations, disrupt their operations, and steal sensitive data.

NY DFS also warns that “[s]upply chain vulnerabilities represent another critical area of concern for organizations using AI.” AI tools require the collection, vetting, and analysis of vast amounts of data. As a result, any third-party vendor or service provider involved in that process is a potential point of exposure for an organization’s non-public information.

Mitigating AI Threats

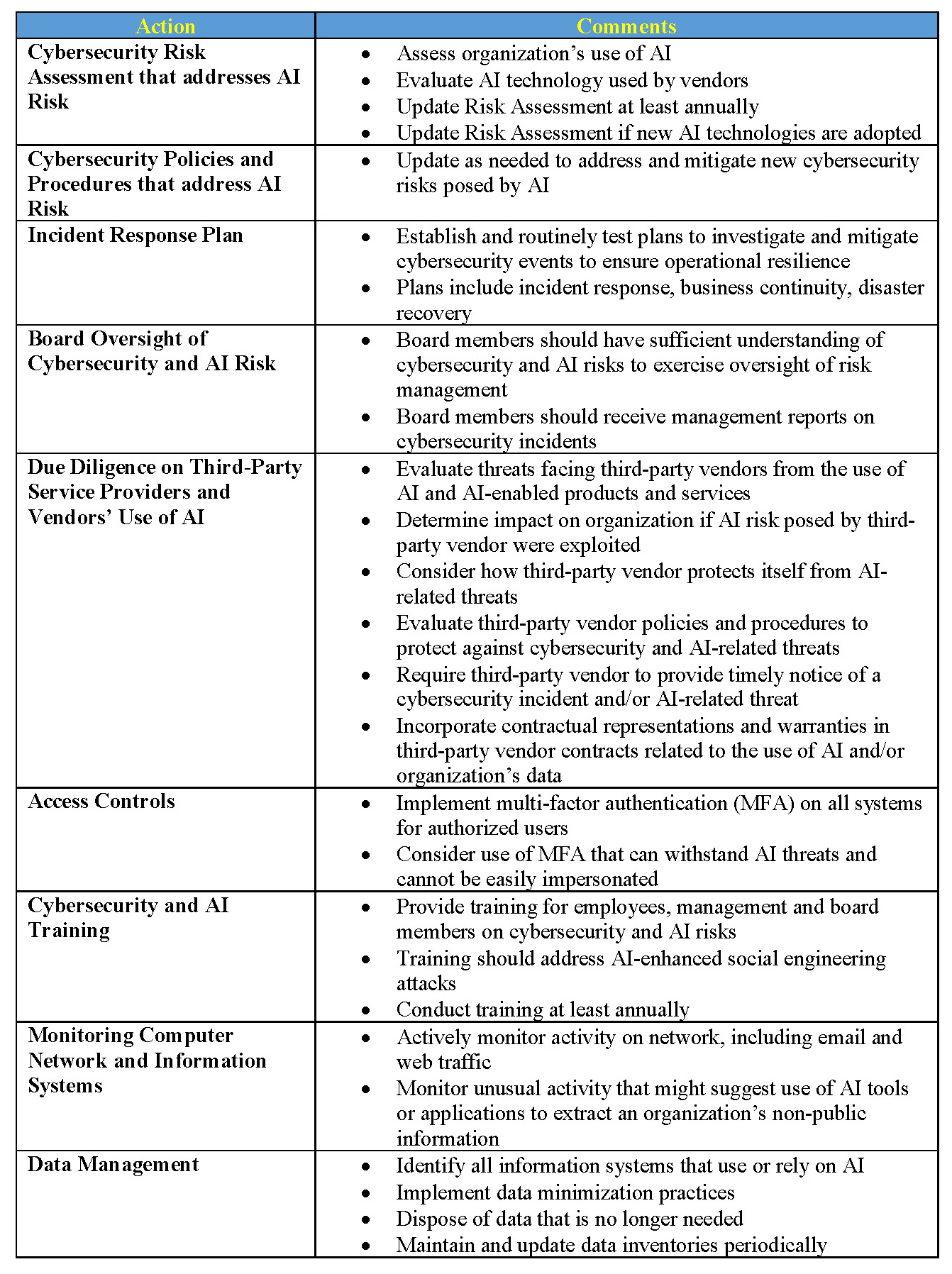

The NY DFS Cybersecurity Regulation (23 NYCRR Part 500) requires covered entities to conduct a cybersecurity risk assessment and implement minimum cybersecurity standards designed to mitigate cyber threats, including those posed by AI. The recent NY DFS guidance contains helpful advice for all organizations that want to take proactive steps to defend against AI-related cyber threats. Some practical advice and guidance is summarized in the table below.

Conclusion

The growing adoption of AI tools and applications presents both potential benefits and risks to organizations across all industry sectors. As the law regulating AI technology continues to evolve, organizations are well advised to take stock of their AI risks now by adopting and implementing risk assessments, cybersecurity policies and procedures, employee training, and contractual arrangements with their vendors that address AI-related cyber threats and privacy concerns.