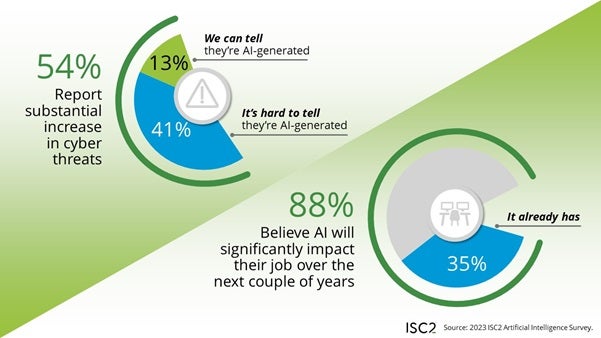

Most cybersecurity professionals (88%) believe AI will have a significant impact on their work, according to a new survey from the International Information System Security Certification Consortium (ISC2); only 35% of respondents have already witnessed the effects of AI on their work (Figure A). The expected impact is not necessarily positive or negative; rather, it indicates that cybersecurity professionals expect their work to change. Respondents also raised concerns about deepfakes, misinformation and social engineering attacks. The survey also covered policy, access and regulation.

How AI Could Affect the Jobs of Cybersecurity Professionals

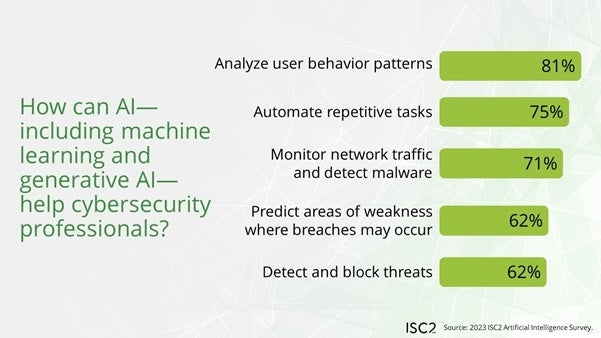

Respondents generally believe AI will make cybersecurity tasks more efficient (82%) and free up time for higher-value work by taking over other tasks (56%). In particular, AI and machine learning could take over these parts of cybersecurity jobs (Figure B):

- Analyzing user behavior patterns (81%).

- Automating repetitive tasks (75%).

- Monitoring network traffic and detecting malware (71%).

- Predicting where breaches might occur (62%).

- Detecting and blocking threats (62%).

The survey does not necessarily classify the response “AI will make parts of my job obsolete” as negative; instead, it is presented as an improvement in efficiency.

Top AI Cybersecurity Concerns and Possible Effects

When it comes to cybersecurity attacks, professionals surveyed were most concerned about:

- Deepfakes (76%).

- Disinformation campaigns (70%).

- Social engineering (64%).

- The current absence of regulation (59%).

- Ethical concerns (57%).

- Breach of privacy (55%).

- The risk of data poisoning, intentional or accidental (52%).

The surveyed community disagreed on whether AI would be better for cyber attackers or defenders. When asked about the statement “AI and ML benefit cybersecurity professionals more than criminals,” 28% agreed, 37% disagreed, and 32% were unsure.

Among the professionals surveyed, 13% said they are confident they can definitively link the increase in cyber threats over the last six months to AI; 41% said they could not make a definitive link between AI and the increase. (These two statistics are subsets of the 54% who said they had seen a substantial increase in cyber threats over the past six months.)

SEE: The UK's National Cyber Security Centre warns that generative AI could increase the volume and impact of cyberattacks over the next two years, although it's a little more complicated than that. (TechRepublic)

Malicious actors could leverage generative AI to launch attacks at speeds and volumes impossible even with a large human team. However, it is still not clear how generative AI has affected the threat landscape.

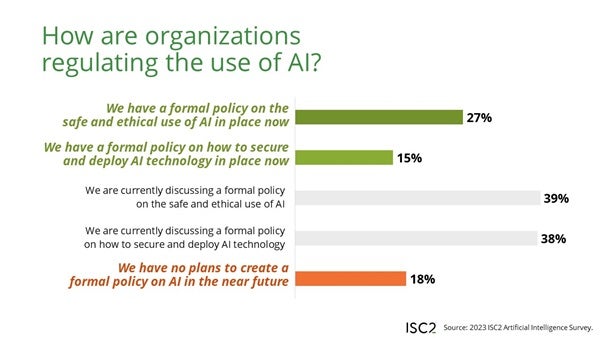

In Flux: Implementation of AI Policies and Access to AI Tools in Companies

Only 27% of ISC2 survey respondents said their organization has formal policies in place for the safe and ethical use of AI; another 15% said their organization has formal policies on how to secure and deploy AI technology (Figure C). Most organizations are still working on an AI use policy of one kind or another:

- 39% of companies surveyed are working on AI ethics policies.

- 38% of companies surveyed are working on safe and secure AI deployment policies.

The survey revealed a wide variety of approaches to giving employees access to AI tools, including:

- My organization has blocked access to all generative AI tools (12%).

- My organization has blocked access to some generative AI tools (32%).

- My organization allows access to all generative AI tools (29%).

- My organization has not had internal discussions about allowing or prohibiting generative AI tools (17%).

- I don’t know my organization’s approach to generative AI tools (10%).

AI adoption is still in flux and will surely change much more as the market grows, falls or stabilizes. Cybersecurity professionals can be at the forefront of raising awareness of generative AI issues in the workplace, because it affects both the threats they respond to and the tools they use for their work. A slim majority of the cybersecurity professionals surveyed (60%) said they are confident they can lead the deployment of AI in their organization.

“Cybersecurity professionals are anticipating both the opportunities and challenges that AI presents and are concerned that their organizations lack the expertise and awareness to safely introduce AI into their operations,” said Clar Rosso, CEO of ISC2, in a press release. “This creates a tremendous opportunity for cybersecurity professionals to lead, applying their expertise in secure technology and ensuring its safe and ethical use.”

How Generative AI Should Be Regulated

How generative AI is regulated will largely depend on the interaction between government regulation and large technology organizations. Four in five respondents said they “clearly see a need for comprehensive and specific regulations” on generative AI. How this regulation can be implemented is a complex issue: 72% of respondents agree with the statement that different types of AI will require different regulations.

- 63% said AI regulation should come from collaborative government efforts (ensuring standardization across borders).

- 54% said AI regulation should come from national governments.

- 61% (surveyed in a separate question) would like to see AI experts come together to support the regulatory effort.

- 28% are in favor of private sector self-regulation.

- 3% want to maintain the current unregulated environment.

The ISC2 Methodology

The survey was distributed between November and December 2023 to an international group of 1,123 cybersecurity professionals who are ISC2 members.

The definition of “AI” can be uncertain today. Although the report uses the general terms “AI” and machine learning throughout, it describes its subject as “large, public-facing language models” such as ChatGPT, Google Gemini or Meta’s Llama, generally known as generative AI.