Introduction – AI brings new ethical challenges and the need for professional registration

With the rapid development of artificial intelligence (AI) and the growing demand for its use, the ethical use of these systems poses new and serious challenges.

The problems are currently most visible in emerging generative AI platforms (used to create content such as text and images). They include misinformation, intellectual property and plagiarism, bias in training data and the ability to influence and manipulate public opinion (e.g. in elections).

The Post Office Horizon IT scandal has highlighted the vital importance of independent standards of professionalism and ethics in the application, development and deployment of technology.

It is true that the UK aims to play a leading role in the development of safe, trustworthy, responsible and sustainable AI, as demonstrated by the November 2023 summit and the associated Bletchley Declaration (1).

We believe that these objectives can only be achieved when:

- Practitioners of AI and other high-stakes technologies meet common standards of accountability, ethics and competence, as licensed professionals.

- Non-technical CEOs, leadership teams, and boards of directors who make decisions about resourcing and developing AI in their organizations share this responsibility and have a better understanding of technical and ethical issues.

This document makes the following recommendations to support the ethical and safe use of AI:

- Every technologist working in a high-stakes IT role, particularly in AI, must be a licensed professional meeting independent standards of ethical practice, accountability and competence.

- Government, industry and professional bodies should jointly support and develop these standards to build public confidence and raise expectations of good practice.

- UK organizations should publish policies on the ethical use of AI in all systems relevant to customers and employees – and these should also apply to leaders who are not technical specialists, including CEOs.

- Technology professionals should expect robust and sustained whistleblowing pathways when they feel they are being asked to act unethically, for example to deploy AI in a way that harms their colleagues, their customers or society.

- The UK government should aim to take the lead and help UK organizations set world-leading ethical standards.

- Professional bodies, such as the BCS, should support this work by regularly seeking and publishing research on the challenges their members face, and advocating for the support and advice they need and expect.

The BCS 2023 ethics survey – Main conclusions

The BCS Ethics Specialist Group conducted a survey of IT professionals in the summer of 2023, ahead of the AI Safety Summit, to help identify the challenges practitioners face (2). This paper presents the key findings and suggests some actions that should be implemented to help address those challenges. The online survey was sent to all UK BCS members in August 2023, and 1,304 people responded. The results show that AI ethics is a topic that BCS members consider a priority, that many have encountered personally, and that is problematic in many ways. There is a lack of consistency in how businesses deal with ethical issues related to technology, with many organizations providing no support to their staff. A summary of the results highlights that:

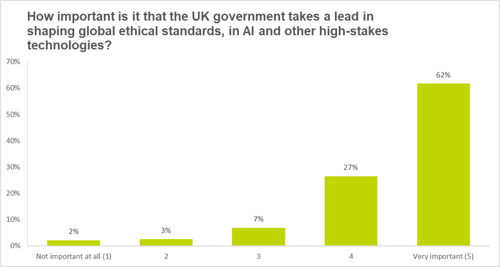

- 88% of respondents believe it is important that the UK government takes the lead in developing global ethical standards, in the area of AI and other high-stakes technologies.

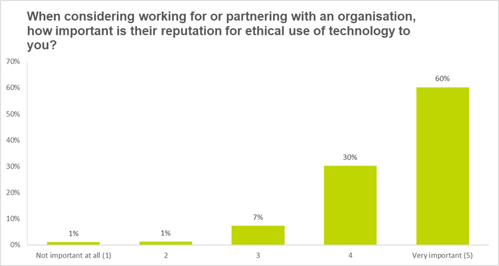

- When considering working or partnering with an organization, 90% say its reputation for ethical use of AI and other emerging technologies is important.

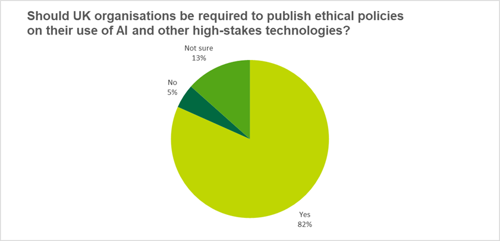

- 82% think UK organizations should be required to publish ethics policies on their use of AI and other high-stakes technologies.

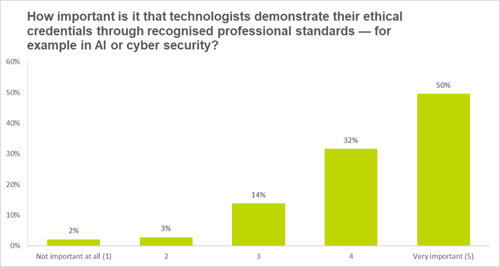

- When it comes to technologists demonstrating their ethical credentials through recognized professional standards, 81% of respondents believe this is important.

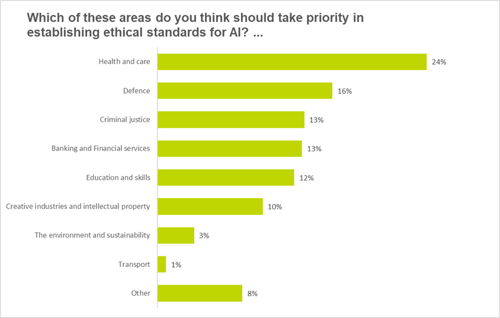

- The largest share of respondents (24%) indicated that health and care should be prioritized in establishing ethical standards for AI.

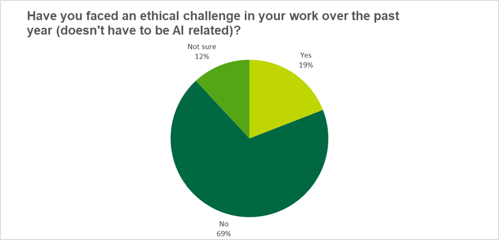

- 19% of respondents have faced an ethical challenge in their work in the past year.

- Employer support on these issues varies, with many respondents reporting having received little or no support in dealing with ethical issues related to technology, although there are examples of good practice.

Results analysis

Priority areas for establishing ethical standards in AI

Nearly a quarter see health and care as the key area, which is unsurprising given the obvious possible ramifications of AI in surgery, diagnosis or patient interaction. However, respondents also pointed to other areas, such as defense, criminal justice, and banking and finance, where the use of AI can have adverse consequences and which require special attention.

Ethical Issues in Professional Computing Practice

When asked whether they had faced any ethical challenges in their work over the past year, 69% of respondents said no. Since 19% said yes and 12% were unsure, this suggests that each year up to roughly a third of BCS members may face ethical challenges. It implies that encountering such challenges is virtually inevitable over the course of a professional career, which supports the long-standing emphasis on including ethics in BCS accredited training. Interestingly, the fact that 12% of respondents were unsure whether they had faced ethical challenges suggests either a lack of conceptual clarity around ethics or a rapidly evolving set of concerns that makes ethical issues hard to categorize.

Organizational reputation for ethical use of AI and other high-stakes technologies

Recognition of the importance of ethics and awareness of the potential harm caused by technology explains why respondents strongly preferred working with organizations with a strong reputation for ethical use of technology. 90% of BCS members who responded to the survey rated this as either very important or important. This raises challenges for organizations in terms of appropriate mechanisms that enable them to demonstrate and report their commitment to ethical work, one of which is the publication of ethics policies.

Requirement to publish policies on the ethical use of AI and other high-stakes technologies

The high value that respondents place on the ethical reputation of the organizations they work with was reflected in the strong support for requiring organizations to publish their policies on the ethical use of technologies, including AI.

More than 80% of those surveyed support this proposal and only 5% are categorically opposed to it. This position is important in that it not only supports the creation of ethical policies and their enforcement, but goes beyond that by calling for the obligation to publish them.

Importance of government leadership on AI and technology ethics

The previous question already shows that respondents believe that the government has a key role to play in ensuring the ethical use of critical technologies, such as AI. To explore the role of government in terms of policies and standards, we asked the question “How important is it that the UK government takes the lead in setting global ethical standards, in the field of AI and other high-stakes technologies?”

88% of respondents believe it is important that the UK government takes the lead in developing global ethical standards, in the area of AI and other emerging technologies. This result also shows the need for parties preparing manifestos ahead of the 2024 general election to clarify their positions on the ethical use of technology. The same applies to devolved administrations and relevant policies falling within their remit. Initiatives like the Centre for Data Ethics and Innovation (CDEI) are a good way to develop these policies. The mandate of the CDEI Ethics Advisory Council ended in September 2023, but the centre will continue to seek “expert opinions and guidance in an agile way that allows us to respond to the opportunities and challenges of the ever-changing AI landscape” (3). In November 2023, the UK government announced the creation of the AI Safety Institute (4).

Demonstrating ethical credentials is “very important”

Respondents clearly understood that they face responsibilities in their role as IT professionals. This is evident from their strong support for acquiring ethical qualifications, for example through ethical standards in areas such as AI or cybersecurity. Only 5% of respondents considered them unimportant, and 81% considered them important or very important.

Supporting IT professionals on ethical issues related to AI and other high-stakes technologies

In our last question, we asked “How did your organization help you raise and manage the (ethics) issue?”

Of those who responded, 41% said they received no support and 35% received “informal support” (e.g. talking to their manager/colleagues).

The survey showed examples of good practice, as illustrated by this response:

“My employer listened to my possible ethical concerns. I was helped to discuss the potential problem with our customers. Our clients and my employer agreed to put controls in place to ensure the potential ethical situation was handled appropriately.”

This shows that some organizations follow good practices, engage in ethical discussions and develop clear policies and procedures for employees.