Generative AI is making its presence felt in more and more fields, but there are well-founded concerns about the accuracy of the insights it provides.

Is it possible to offer the convenience of a large language model AI system with the logic and precision of advanced analytics? Arina Curtis, CEO and co-founder of DataGPT, thinks so. We spoke to her to find out more.

BN: Why are existing large language models poorly suited to data analysis applications?

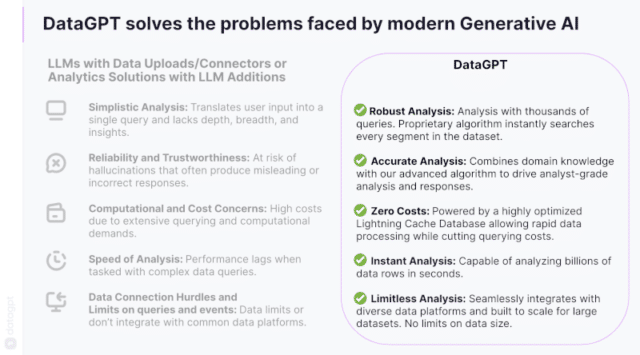

AC: Large language models (LLMs) are limited in their ability to perform data analysis and struggle to solve computationally complex questions. Although they can generate human-like text and answer general knowledge questions quite well, they cannot interpret data in a meaningful way without the addition of a dedicated data interface. Additionally, LLMs can generate ambiguous or inaccurate information, posing a major challenge in terms of the precision and accuracy needed for effective and actionable data analysis.

BN: What are the main challenges in using LLMs effectively?

AC: Popular solutions fall into two main categories: LLMs with a simple data interface (e.g. LLM + Databricks) or BI solutions integrating generative AI. Solutions in the first category handle only limited data volumes and source integrations. They also lack in-depth analysis and an understanding of the business context of the data. Solutions in the second category leverage generative AI to modestly accelerate traditional BI workflows, creating the same type of narrow reports and dashboards.

BN: How is DataGPT different?

AC: DataGPT offers a completely new data experience, allowing all users to access comprehensive analysis by simply asking questions in everyday language.

The LLM is the right brain of the system. It’s really good at contextual understanding. But you also need the left brain, the DataAPI, an algorithm to perform critical logical functions and draw conclusions. Many platforms fail when it comes to combining the “left-brain” logic tasks of deep data analysis with the “right-brain” LLM interpretation functions.

DataGPT’s self-hosted LLM, combined with core analytics technology, makes sophisticated analytics accessible, enabling faster data-driven decision-making and freeing analysts from the burden of ad-hoc and follow-up requests.

BN: Can you tell us a little bit about the technologies behind this?

AC: DataGPT’s AI Analyst is based on three key pillars:

- Lightning Cache: Our proprietary database is 90x faster than traditional databases, enabling analytics that are 15x cheaper and queries that are 600x faster than with standard business intelligence tools.

- Data Analysis Engine: Our algorithm performs a comprehensive and logical analysis of customer data. Ask DataGPT a question and it will run millions of queries and calculations to determine the most relevant and impactful information. Our sophisticated analysis can answer complex questions, such as “why did this happen?”

- Data Analysis Agent: Intelligent enough to handle ambiguities in human speech and accurately interpret the data elements of interest to the user, while handling holistic/contextual interpretation and completely avoiding the inaccuracies (e.g. “hallucinations”) of generic base models. It uses natural language querying (NLQ) functionality to search and provide insights from enterprise data sources, and creates stories around these insights that can be used to make more informed business decisions.

BN: Is this just for data analysts or can anyone use it?

AC: DataGPT serves product, UX, marketing, ad sales, and customer service teams across a wide range of B2B and B2C industries, including fintech, e-commerce, gaming, media, entertainment, and travel.

While data teams make up the bulk of our users, our mission is to empower every person, in any role and in any industry, to use data to make decisions, big and small. Non-technical business users and department managers can ask questions using a familiar chat window in everyday language to pursue revenue and growth opportunities.

Image credit: BiancoBlue/Dreamstime.com