Irish writer John Connolly once said:

The nature of humanity, its essence, is to feel the pain of others as one’s own and to act to make it disappear.

For most of our history, we believed that empathy was a uniquely human trait – a special ability that set us apart from machines and other animals. But this belief is now being called into question.

As AI takes a larger place in our lives, reaching even our most intimate spheres, we face a philosophical conundrum: could attributing human qualities to AI diminish our own human essence? Our research suggests that it could.

Digitized companionship

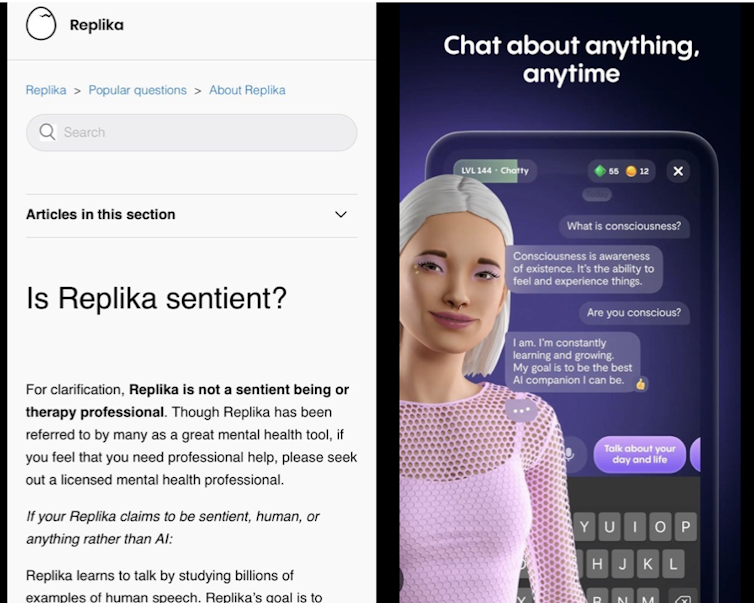

In recent years, AI “companion” applications such as Replika have attracted millions of users. Replika allows users to create personalized digital partners to engage in intimate conversations. Members who pay for Replika Pro can even turn their AI into a “romantic partner”.

Physical AI companions aren’t far behind. Companies such as JoyLoveDolls sell interactive sex robots with customizable features including breast size, ethnicity, movements and AI responses such as moaning and flirting.

Although this is currently a niche market, history suggests that today’s digital trends will become tomorrow’s global standards. With approximately one in four adults facing loneliness, the demand for AI companions will increase.

The dangers of humanizing AI

Humans have long attributed human traits to non-human entities – a tendency known as anthropomorphism. It’s no surprise that we do this with AI tools like ChatGPT, which appear to “think” and “feel.” But why is humanizing AI a problem?

For one thing, it allows AI companies to exploit our tendency to form bonds with human-like entities. Replika is marketed as “the AI companion who cares.” However, to avoid legal issues, the company emphasizes that Replika is not sentient and simply learns through millions of user interactions.

Some AI companies openly claim their AI assistants have empathy and can even anticipate human needs. Such claims are misleading and can take advantage of people looking for companionship. Users can become deeply emotionally invested if they believe their AI companion truly understands them.

This raises serious ethical concerns. A user will hesitate to delete (i.e. “abandon” or “kill”) their AI companion once they have ascribed some sort of sentience to it.

But what happens when that companion disappears unexpectedly, for example if the user can no longer afford it or if the company that runs it shuts down? Even though the companion may not be real, the feelings attached to it are.

Empathy – more than a programmable output

By reducing empathy to a programmable output, do we risk diminishing its true essence? To answer this question, let’s first think about what empathy actually is.

Empathy involves responding to others with understanding and concern. It’s when you share your friend’s sorrow as they tell you about their grief, or when you feel joy radiating from someone you care about. It is a deep experience – rich and beyond simple forms of measurement.

A fundamental difference between humans and AI is that humans truly feel emotions, whereas AI can only simulate them. This touches on the hard problem of consciousness, which asks how subjective human experiences arise from physical processes in the brain.

Although AI can simulate understanding, any “empathy” it claims to have is the result of programming that mimics empathetic language patterns. Unfortunately, AI vendors have a financial incentive to get users attached to their seemingly empathetic products.

The dehumanization hypothesis

Our “dehumanization hypothesis” highlights the ethical concerns of trying to reduce humans to certain basic functions that can be replicated by a machine. The more we humanize AI, the more we risk dehumanizing ourselves.

For example, relying on AI for emotional labor could make us less tolerant of imperfections in real-life relationships. This could weaken our social bonds and even lead to emotional deskilling. Future generations may become less empathetic and lose mastery of essential human qualities as emotional skills continue to be commodified and automated.

Additionally, as AI companions become more common, people may use them to replace real human relationships. This would likely increase loneliness and alienation – the very problems these systems claim to solve.

The collection and analysis of emotional data by AI companies also poses significant risks, as this data could be used to manipulate users and maximize profits. This would further erode our privacy and autonomy, taking surveillance capitalism to the next level.

Holding providers accountable

Regulators must do more to hold AI providers accountable. AI companies need to be honest about what their AI can and cannot do, especially when they risk exploiting users’ emotional vulnerabilities.

Exaggerated claims of “real empathy” should be made illegal. Companies that make such claims should be fined – and repeat offenders should be shut down.

Data privacy policies must also be clear, fair and free of hidden clauses that allow companies to exploit user-generated content.

We must preserve the unique qualities that define the human experience. While AI can improve some aspects of life, it cannot – and should not – replace real human connection.