In the ever-evolving field of software engineering, generative artificial intelligence is rapidly gaining ground, offering an unprecedented blend of creativity and automation.

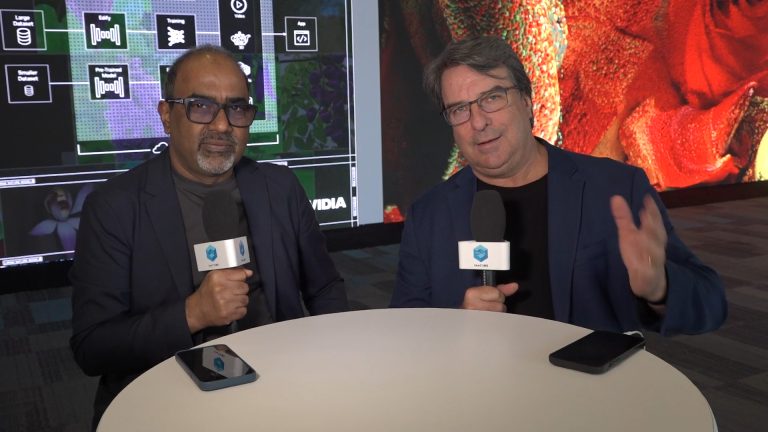

The shift to a generative era in software and computing highlights the challenges and potential advancements in AI programming, with both businesses and consumers focusing on AI, according to John Furrier (photo, right), executive analyst at theCUBE. Companies that move from being a technology provider to a service provider often get lost in this transition, as they end up competing with other service providers and muddying the waters.

“When you provide the technology, you give it to each service provider so they can build things and pass it on to the builders of these applications, right? It’s the technology provider,” said Sarbjeet Johal (left), technology analyst and go-to-market strategist. “You work with many service providers; you enable people to do things. So if you’re trying to be a service provider, you’re now competing with other service providers and that muddies the waters.”

Furrier spoke with Johal at the Nvidia GTC event, in an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio. They discussed the shift to a generative era in software and computing, with a focus on AI programming, digital twin technology, and the future of AI and custom silicon in infrastructure.

The Impact of Generative AI on Software Engineering and Industry Transformation

Nvidia is investing in digital twin technology to transform industries, and next year’s GTC will focus on more practical use cases, Johal explained. Nvidia’s stack is essential for generative AI, and two needs are crucial: specialized chips that reduce power consumption and standards that lower the cost of AI. Accelerated computing and a more distributed computing approach will lead to more success in AI use cases. The standard for clustered systems has been set, with a complete rebuild of what a system is, including reduced power and cooling requirements.

“I think one of the things that’s going to be different … you’re going to see a lot more competition. I think Nvidia has raised the bar … in terms of approaching generative AI systems,” Furrier said. “What I remember most from this year are two things. First … I think this idea of a monolithic system that doesn’t look like a mainframe but rather like distributed computing will allow for a number of use cases for AI to be accelerated more quickly. The second thing that jumps out at me is that I think this sets the standard for what we call clustered systems.”

The need for a large GPU for simple tasks is being questioned, and shifting workloads between CPU and GPU as needed will be a major priority in the coming years, according to Johal. The future of AI and custom silicon will reshape the infrastructure game with open standards and purpose-built designs for all workloads.

“If you separate the computer from the physical elements, it has to be software defined. If not, it’s more likely software built into that hardware. It’s kind of an old stack paradigm,” Johal said. “The benefit of having software-defined physical assets is that we can get the most out of them. Since most of this equipment will need electricity to operate and maintain, we want to turn it off when we don’t need it.”

Here’s the full video interview, part of SiliconANGLE and theCUBE Research’s coverage of the Nvidia GTC event:

Photo: SiliconANGLE
