Administration announces completion of 150-day actions requested by President Biden’s historic executive order on AI

Today, Vice President Kamala Harris announced that the White House Office of Management and Budget (OMB) is issuing OMB's first government-wide policy to mitigate the risks of artificial intelligence (AI) and harness its benefits. The policy implements a core component of President Biden's landmark Executive Order on AI, which directed sweeping action to strengthen AI safety and security, protect Americans' privacy, advance fairness and civil rights, defend consumers and workers, promote innovation and competition, advance American leadership around the world, and much more. Federal agencies reported that they have completed all of the 150-day actions requested by the Executive Order, building on their previous success in completing all of the 90-day actions.

This multi-faceted guidance to federal departments and agencies builds on the Biden-Harris Administration’s record of ensuring America leads the way in responsible AI innovation. In recent weeks, OMB announced that the President’s budget invests in agencies’ capacity to responsibly develop, test, acquire, and integrate transformative AI applications across the federal government.

As directed by the Executive Order, the new OMB policy requires the following actions:

Managing Risks from the Use of AI

This guidance places people and communities at the center of the government's innovation goals. Because of the role they play in our society, federal agencies have a distinct responsibility to identify and manage AI risks, and the public must have confidence that agencies will protect their rights and safety.

By December 1, 2024, federal agencies will be required to implement concrete safeguards when using AI in a way that could impact Americans' rights or safety. These safeguards include a series of mandatory actions to reliably assess, test, and monitor AI's impacts on the public, mitigate the risks of algorithmic discrimination, and provide the public with transparency into how the government uses AI. They apply to a wide range of AI applications, from health and education to employment and housing.

For example, by adopting these safeguards, agencies can ensure that:

- At the airport, travelers will continue to have the option to opt out of the use of TSA facial recognition without delay or loss of their place in line.

- When AI is used in the federal health care system to support critical diagnostic decisions, a human oversees the process of verifying the tools' results, helping to avoid disparities in access to health care.

- When AI is used to detect fraud in government services, important decisions are subject to human oversight and those affected have the opportunity to seek redress for harm caused by AI.

If an agency cannot apply these safeguards, it must stop using the AI system, unless agency leadership justifies why doing so would increase risks to safety or rights overall or would create an unacceptable impediment to critical agency operations.

To protect the federal workforce as the government adopts AI, the OMB policy encourages agencies to consult with federal employee unions and to adopt the Department of Labor's forthcoming principles on mitigating AI's potential harms to employees. The Department of Labor is also leading by example, consulting with federal employees and unions both in developing these principles and in its own governance and use of AI.

The guidance also advises federal agencies on managing risks specific to their procurement of AI. Federal procurement of AI presents unique challenges, and a healthy AI marketplace requires safeguards for fair competition, data protection, and transparency. Later this year, OMB will take action to ensure that agencies' AI contracts align with OMB policy and protect the public's rights and safety from AI-related risks. The RFI released today will collect public input on ways to ensure that private sector companies supporting the federal government follow the best available practices and requirements.

Expanding Transparency of AI Use

The policy released today requires federal agencies to improve public transparency about their use of AI by requiring agencies to:

- Publicly release expanded annual inventories of their AI use cases, including identifying use cases that impact rights or safety and how the agency is addressing the relevant risks.

- Report metrics on agency AI use cases that are not included in the public inventory due to their sensitivity.

- Notify the public of any AI exempted by a waiver from complying with any element of the OMB policy, along with the justifications for the waiver.

- Release government-owned AI code, models, and data, where such releases do not pose a risk to the public or to government operations.

Today, OMB is also releasing draft instructions to agencies detailing the contents of this public reporting.

Advancing Responsible AI Innovation

OMB's policy will also remove unnecessary barriers to federal agencies' responsible AI innovation. AI technology presents tremendous opportunities to help agencies address society's most pressing challenges. Examples include:

- Addressing the climate crisis and responding to natural disasters. The Federal Emergency Management Agency is using AI to quickly review and assess structural damage in the aftermath of hurricanes, and the National Oceanic and Atmospheric Administration is developing AI to produce more accurate forecasts of extreme weather, flooding, and wildfires.

- Advancing public health. The Centers for Disease Control and Prevention uses AI to predict the spread of disease and detect illicit opioid use, and the Centers for Medicare & Medicaid Services uses AI to reduce waste and identify anomalies in drug costs.

- Protecting public safety. The Federal Aviation Administration is using AI to help manage air traffic in major metropolitan areas and improve travel times, and the Federal Railroad Administration is researching AI to help predict unsafe railroad conditions.

Advances in generative AI are expanding these opportunities, and OMB's guidance encourages agencies to responsibly experiment with generative AI, with adequate safeguards in place. Many agencies have already started this work, including deploying AI chatbots to improve customer experiences and running other AI pilots.

Growing the AI Workforce

Building and deploying AI responsibly to serve the public starts with people. OMB’s guidance encourages agencies to develop and hone their AI talent. Agencies are actively strengthening their workforces to advance AI risk management, innovation and governance, including:

- The Biden-Harris Administration has committed to hiring 100 AI professionals by summer 2024 to promote the trustworthy and safe use of AI as part of the National AI Talent Surge created by Executive Order 14110, and will host a career fair for AI roles across the federal government on April 18.

- To facilitate these efforts, the Office of Personnel Management has issued guidance on pay and leave flexibilities for AI roles, to improve retention and underscore the importance of AI talent across the federal government.

- The President’s budget for FY 2025 includes an additional $5 million to expand the General Services Administration’s government-wide AI training program, which last year hosted more than 4,800 participants from 78 federal agencies.

Strengthening AI Governance

To ensure accountability, leadership, and oversight of the use of AI in the federal government, OMB policy requires federal agencies to:

- Designate Chief AI Officers, who will coordinate the use of AI across their agencies. Since December, OMB and the Office of Science and Technology Policy have regularly convened these officials in a new Chief AI Officer Council to coordinate their efforts across the federal government and prepare for implementation of OMB's guidance.

- Establish AI Governance Boards, chaired by the Deputy Secretary or equivalent, to coordinate and govern the use of AI across the agency. To date, the Departments of Defense, Veterans Affairs, Housing and Urban Development, and State have established these governance bodies, and every CFO Act agency is required to do so by May 27, 2024.

In addition to this guidance, the Administration is announcing several other measures to promote the responsible use of AI in government:

- OMB is issuing a Request for Information (RFI) on Responsible Procurement of AI in Government to inform future OMB action to govern AI use under federal contracts;

- Agencies will expand reporting on the federal AI use case inventory for 2024, broadly increasing public transparency about how the federal government uses AI;

- The Administration has committed to hiring 100 AI professionals by summer 2024 as part of the National AI Talent Surge to promote the trustworthy and safe use of AI.

With these actions, the Administration is demonstrating that government is leading by example as a global model for the safe, secure, and trustworthy use of AI. The policy announced today builds on the Administration's Blueprint for an AI Bill of Rights and the National Institute of Standards and Technology's (NIST) AI Risk Management Framework. It will strengthen federal accountability and oversight of AI, increase transparency for the public, advance responsible AI innovation for the public good, and create a clear baseline for managing risks.

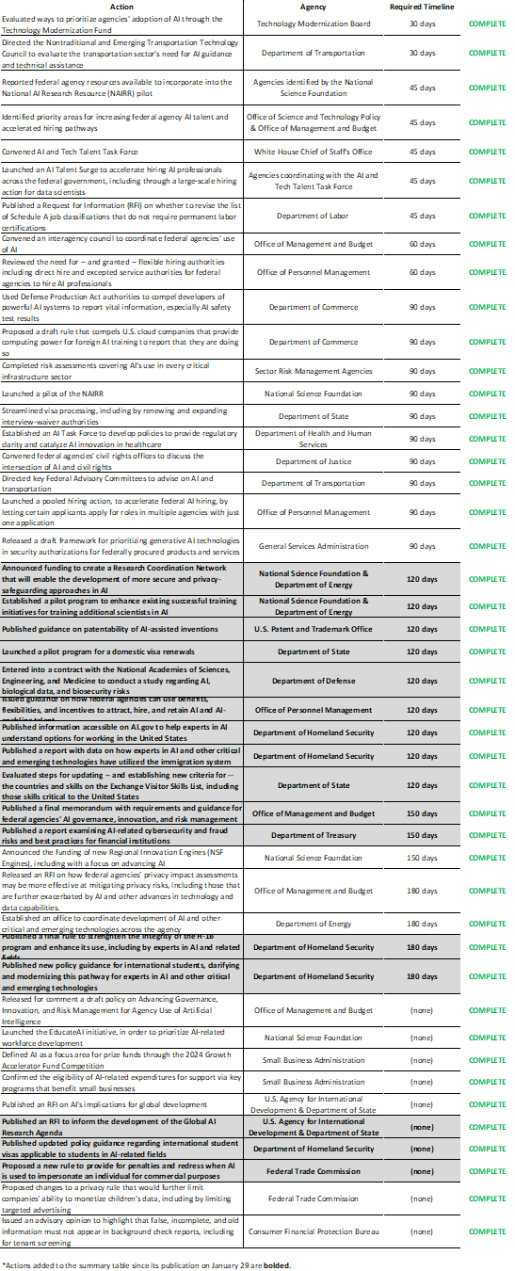

Today's announcement also marks a major milestone: 150 days since the issuance of Executive Order 14110. The table below provides an updated summary of many of the activities federal agencies have completed in response to the Executive Order.

###