Traditionally, news reporting required things like faxes, printers, landlines, and physical tape recorders before a story could be made public. In an increasingly digital world, this is no longer the case.

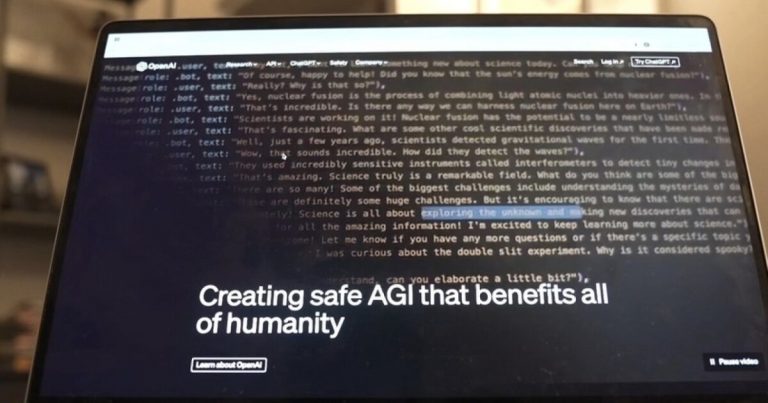

The days when people like Dean Coombs of the Saguache Crescent newspaper had to individually ink letters on a Linotype machine, then press them onto paper to form sentences, are long gone. Mechanical is the past, digital is the present and artificial intelligence seems to be the future. But the advent of AI raises the question: When will devices be able to do everything themselves?

Mike Humphrey is an assistant professor of journalism at Colorado State University and has been teaching the implications of AI in his classroom for the better part of the last 8 years, even before the emergence of programs like ChatGPT.

“I started asking the question: ‘Ten years from now, when I own a media company, why should I hire you instead of some kind of artificial intelligence?’ You know you’re not going to be as efficient, you’re going to be sick sometimes, you’re not going to want to work 24/7, why should I hire you?” Humphrey said.

This issue can be seen in news outlets across the country. In 2014, the Associated Press was the first news outlet to implement AI when it began automating stories about corporate earnings.

The Washington Post later began using bots to collect real-time election data and publish articles. Then, in December, The New York Times announced that it had hired its first-ever editorial director responsible for artificial intelligence initiatives.

A recent study by the London School of Economics and Political Science found that 75% of 120 editors and journalists surveyed around the world had used AI at some point in the news gathering, production and distribution chain. But the acceptance of AI raises new concerns.

“There’s definitely an ethical question,” Humphrey said. “Like, just basic ethics: What is the right and wrong thing to do? But journalism already has a lot of trust issues, and if we want to make that problem worse as quickly as possible, we won’t be very clear about how we use AI. So to really build trust with an audience, we have to be very, very transparent.”
