What the GAO found

To promote transparency and inform the public about how artificial intelligence (AI) is used, federal agencies are required by Executive Order No. 13960 to maintain an inventory of AI use cases. The Department of Homeland Security (DHS) has established such an inventory, which is published on the Department’s website.

However, the DHS inventory of AI use cases for cybersecurity is not accurate. Specifically, the inventory identified two cybersecurity use cases, but officials told us that one of the two was mischaracterized as AI. Although DHS has a process for reviewing use cases before they are added to the AI inventory, the agency acknowledged that it does not confirm whether uses are properly characterized as AI. Until DHS expands its process to include such determinations, it will be unable to ensure that its use cases are accurately reported.

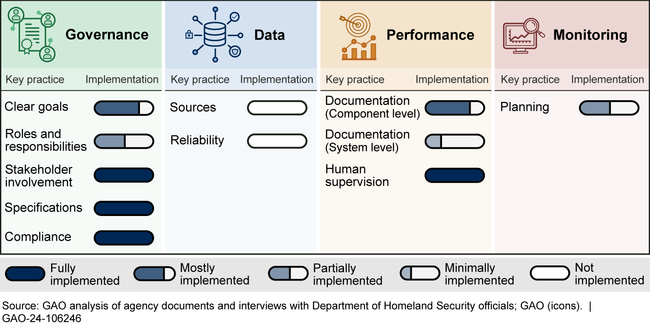

DHS has implemented some, but not all, of the key practices from GAO's AI Accountability Framework for managing and overseeing its use of AI for cybersecurity. GAO assessed the remaining cybersecurity use case, Automated Personally Identifiable Information (PII) Detection, against 11 key practices selected from the framework (see figure).

Status of the Department of Homeland Security’s Implementation of Selected Key Practices to Manage and Oversee Artificial Intelligence for Cybersecurity

GAO found that DHS had fully implemented four of the 11 key practices and had implemented five others to varying degrees in the areas of governance, performance, and monitoring. According to officials, DHS did not implement two practices: documenting the sources and origins of the data used to develop its PII detection capabilities, and assessing the reliability of that data. The GAO AI Accountability Framework calls on management to provide reasonable assurance of the quality, reliability, and representativeness of the data used in an application, from development through operation and maintenance. Considering data sources and reliability is essential to model accuracy. Fully implementing the key practices can help DHS ensure accountable and responsible use of AI.

Why GAO did this study

Executive Order No. 14110, issued in October 2023, notes that while responsible use of AI has the potential to help solve pressing challenges and make the world safer, irresponsible use could exacerbate societal harms and present risks to national security. Pursuant to the requirements of Executive Order No. 13960, issued in 2020, DHS has maintained an inventory of its AI use cases since 2022.

This report examines the extent to which DHS (1) verified the accuracy of its inventory of AI use cases for cybersecurity and (2) incorporated selected practices from the GAO AI Accountability Framework to manage and oversee its use of AI for cybersecurity.

GAO reviewed relevant laws, OMB guidance, and agency documents, and interviewed DHS officials. GAO applied 11 key practices from the framework to DHS's AI cybersecurity use case, Automated Personally Identifiable Information Detection, a tool DHS uses to prevent the unnecessary sharing of PII. GAO selected the 11 key practices to reflect the framework's four principles, align with the early stages of AI adoption, and be highly relevant to this specific use case.