Meanwhile, AI for good remains a secondary topic, despite its enormous potential to benefit the world.

As artificial intelligence plays a growing role in people's lives, it is time for businesses and non-profit organizations to consider how to balance innovation with ethical responsibility.

Why AI for good?

“AI for good” is a broad term covering the application of artificial intelligence to social, environmental, and humanitarian challenges. It includes any use of AI that benefits humanity as a whole or protects our planet.

Some of the areas where AI is already having a positive impact include:

- advancing medical research to discover new treatments

- improving access to education through virtual tutors and models for the early detection of learning disabilities

- creating strategies to reduce carbon emissions

- using predictive analytics to improve disaster preparedness and response.

All of these AI-based capabilities are revolutionary and were out of reach just a decade ago. However, developing AI for good solutions also comes with its share of risks.

Despite noble intentions, developers behind AI for good projects can run into pitfalls such as algorithmic bias, particularly if they train their models on a small, homogeneous dataset. While developing solutions to global challenges, they may also unintentionally violate local data protection laws. I discuss these and other issues in more detail below.

Case Studies: Real-World Applications of AI for Good

Here are two examples of successful AI for good projects.

Merck

Merck, a leader in the life sciences industry, uses AIDDISON™, an AI-powered R&D assistant, to reduce chemical identification time from 6 months to just 6 hours. The platform can generate potential drug molecules, simulate their interactions, and predict outcomes using machine learning and predictive models.

AIDDISON™ takes drug discovery to a new level. It not only minimizes resource usage but also significantly speeds up the discovery process, allowing scientists to focus on the candidates with the greatest potential. This helps researchers push scientific boundaries and improve health outcomes. By paving the way for faster, more efficient treatment development, this approach also improves access to healthcare.

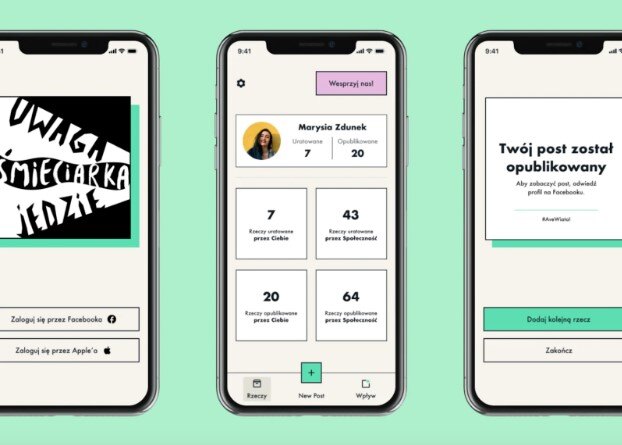

Uwaga, śmieciarka jedzie

“Uwaga, śmieciarka jedzie” (Polish for “Watch out, the garbage truck is coming”) started as a Facebook group for people who wanted to give used and unwanted household items a new life.

What started as a grassroots initiative in 2013 has grown into an NGO with a community that prevents around 35,000 tonnes of used items from ending up in landfills each year.

The movement grew so quickly that by 2022 there were over 270 local groups, making community oversight “difficult” (to say the least). Tech to the Rescue helped the foundation develop a GenAI application that not only makes it easier to publish articles but also awards users engagement points and lets them organize fundraisers.

These new capabilities allow the community to keep expanding and, in turn, to increase its positive social and environmental impact.

What made these two initiatives successful? There are three main factors:

- Cross-functional collaboration – by bringing together specialists from diverse backgrounds, the teams were able to create versatile solutions that had a real impact.

- Ethical planning – ensuring the platform supports social impact goals and enhances accountability and transparency in its use of AI.

- User-centered design – maximizing engagement by prioritizing ease of use and personal impact tracking.

Aligning AI implementation with ethical concerns

One of the biggest threats to AI for good projects is that they can backfire and worsen the very problem they were meant to solve.

The Predictive Policing Project serves as a powerful warning. It was designed as an AI model to predict and prevent criminal activity in the least safe areas of cities. Unfortunately, it was trained on biased data about a specific neighborhood, so instead of detecting crime across the whole city, it only reinforced the “over-policing” of that neighborhood.

If such problems go undetected, they can not only leave vulnerable groups unprotected but even make their situation worse.

Another ethical concern is that AI often processes highly sensitive data, which could harm people if it fell into the wrong hands. Imagine a project designed to address social disparities being misused by an authoritarian regime to surveil citizens, stripping them of privacy and autonomy. The “ethics of AI” can also vary by region, which we need to keep in mind when developing social impact solutions.

Prepare a solid roadmap for responsible AI implementation

To use AI responsibly, it is essential to develop a detailed roadmap. It should cover steps such as data collection, model development, testing, and deployment, and each step should follow guidelines for the ethical use of AI. For example, you can run a strict data audit from the start to detect bias in your training datasets.
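A data audit of this kind can start with a simple representation check. The Python sketch below compares each group's share of the training data with a reference share (for example, taken from census figures) and flags groups that deviate by more than a chosen tolerance. The record format, the `audit_representation` function, the `region` attribute, and the 0.05 tolerance are hypothetical placeholders, not part of any specific tool.

```python
from collections import Counter


def audit_representation(records, attribute, reference_shares, tolerance=0.05):
    """Compare each group's share of `records` with its expected share.

    records: list of dicts, one per training example (hypothetical format)
    attribute: key holding the group label, e.g. "region"
    reference_shares: expected share per group, e.g. {"urban": 0.6, "rural": 0.4}
    tolerance: largest acceptable absolute gap between observed and expected share

    Returns {group: observed_share - expected_share} for groups outside tolerance.
    Groups that appear in the data but not in `reference_shares` are ignored.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to audit")
    findings = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        gap = observed - expected
        if abs(gap) > tolerance:
            findings[group] = round(gap, 3)
    return findings


# Hypothetical training set: 80 urban and 20 rural examples.
training_data = [{"region": "urban"}] * 80 + [{"region": "rural"}] * 20
print(audit_representation(training_data, "region", {"urban": 0.6, "rural": 0.4}))
# {'urban': 0.2, 'rural': -0.2}
```

A headcount check like this only catches imbalances in groups you already know to look for; label balance and proxy variables (such as postcodes standing in for ethnicity) need separate checks.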

The goals you set should serve both ethical and business objectives.

Let’s say you want to deploy AI for recruiting. Set goals that prioritize candidate equity by removing – or at least reducing – racial or gender bias, while improving recruiting efficiency.
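One way to make such a goal measurable is to compare selection rates across candidate groups. The sketch below assumes you log how many applicants from each group a screening model advanced, and flags groups whose selection rate falls below 80% of the highest group's rate. This is the "four-fifths rule" heuristic from US employment selection guidelines; it is a warning signal rather than proof of bias, and the group names, counts, and function names are hypothetical.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (selected, applicants); returns selected / applicants."""
    return {group: selected / applicants
            for group, (selected, applicants) in outcomes.items()
            if applicants > 0}


def adverse_impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so there is no disparity in selection rates.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(outcomes, threshold=0.8):
    """Return groups whose ratio is below the four-fifths (0.8) threshold."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening results: (candidates advanced, total applicants).
screening = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
print(adverse_impact_ratios(screening))
print(flag_adverse_impact(screening))  # ['group_b']
```

Tracking this ratio alongside time-to-hire lets a team see whether efficiency gains come at the expense of candidate equity.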

Don't forget milestones and benchmarks. These should include model performance audits that check accuracy and fairness, as well as privacy compliance checks that confirm data processing aligns with legal standards such as the GDPR. For example, a roadmap for a healthcare app could include a checkpoint that checks patient data for bias and validates compliance with medical privacy laws.
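A model performance audit like the one described above could be automated as a release gate. This sketch assumes you have true labels, model predictions, and a group attribute for a held-out evaluation set. It passes only if overall accuracy meets a minimum and no group's accuracy trails the best group's by more than a set gap. The `fairness_checkpoint` function and both thresholds are illustrative assumptions; privacy and legal compliance checks would still need their own review.

```python
from collections import defaultdict


def fairness_checkpoint(y_true, y_pred, groups, min_accuracy=0.85, max_gap=0.05):
    """Release gate combining an accuracy audit with a simple fairness audit.

    Passes only if overall accuracy is at least `min_accuracy` and the gap
    between the best- and worst-performing group's accuracy is at most
    `max_gap`. Both thresholds are illustrative placeholders.
    """
    if not y_true or not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("inputs must be non-empty and of equal length")
    seen = defaultdict(int)
    correct = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        seen[group] += 1
        correct[group] += int(truth == pred)
    per_group = {group: correct[group] / seen[group] for group in seen}
    overall = sum(correct.values()) / len(y_true)
    gap = max(per_group.values()) - min(per_group.values())
    return {
        "overall_accuracy": overall,
        "per_group_accuracy": per_group,
        "accuracy_gap": gap,
        "passed": overall >= min_accuracy and gap <= max_gap,
    }


# Hypothetical evaluation set: the model is perfect on group "a" but not on "b".
report = fairness_checkpoint(
    y_true=[1, 0, 1, 1, 0, 1, 0, 1],
    y_pred=[1, 0, 1, 1, 0, 0, 1, 1],
    groups=["a", "a", "a", "a", "b", "b", "b", "b"],
)
print(report["per_group_accuracy"], report["passed"])  # {'a': 1.0, 'b': 0.5} False
```

Accuracy parity is only one of several fairness metrics; depending on the use case, comparing false negative rates per group (for instance, missed diagnoses) may matter more.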

Using AI Responsibly: A Checklist for Tech Companies

Here is a list you can use for your AI for good project:

- Only collect data with people’s permission, respecting confidentiality rules.

- Remove or hide any personal information in the data to protect privacy.

- Regularly review how data is used to ensure it meets ethical standards.

- Check the data for imbalances and adjust it to be more representative.

- Apply bias mitigation techniques so the AI makes fairer decisions.

- Keep an eye on the results over time to ensure they don’t develop new biases.

Engage diverse perspectives

- Invite feedback from different groups, including users, experts, and people affected by AI.

- Use this feedback to understand and address any ethical concerns.

- Document concerns raised during this feedback process and respond thoughtfully.

Transparency and communication

- Create regular reports to keep everyone informed about the impact of AI: good, bad or unintended.

- Explain all updates, limitations and improvements in simple and inclusive language.

- Make sure these reports are easy to access, even for a non-specialist audience.

Clear explanations and accountability

- Make sure the AI can explain its decisions in a way that people can understand.

- Keep records showing why the AI made certain choices, including the factors that influenced it.

- Clearly identify who is responsible for ensuring that AI meets ethical and security standards.

Continuous monitoring for safety

- Regularly verify that the AI is working safely and as expected.

- Include ways to disable the AI or replace it if it starts causing problems.

- Test the AI to make sure it works well in different real-world situations.

Positive social and environmental impact

- Think about how AI could affect society and the environment.

- Ensure AI aligns with organizational values and has a positive impact on the world.
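For the "remove or hide any personal information" item, one common technique is pseudonymization: dropping direct identifiers and replacing them with a keyed hash, so records belonging to the same person can still be linked. The sketch below uses Python's standard `hmac` and `hashlib` modules; the field names and the `pseudonymize` helper are hypothetical. Under the GDPR, pseudonymized data generally still counts as personal data, so the key should be stored separately and access to it restricted.

```python
import hashlib
import hmac

# Hypothetical direct identifiers; adapt to your own data schema.
DIRECT_IDENTIFIERS = frozenset({"name", "email", "phone"})


def pseudonymize(record, secret_key, id_field="email", drop=DIRECT_IDENTIFIERS):
    """Return a copy of `record` without direct identifiers.

    A keyed SHA-256 hash (HMAC) of `id_field` is added as `person_token`, so
    records for the same person stay linkable without storing who they are.
    Without the secret key, the token cannot be recomputed from a known email.
    """
    normalized = record[id_field].strip().lower().encode("utf-8")
    token = hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()
    cleaned = {key: value for key, value in record.items() if key not in drop}
    cleaned["person_token"] = token
    return cleaned


# In practice the key would come from a secrets manager, not source code.
key = b"example-key-do-not-use"
volunteer = {"name": "Jan Kowalski", "email": "jan@example.org",
             "age_band": "30-39", "region": "Mazovia"}
print(pseudonymize(volunteer, key))
```

Keeping only coarse fields such as an age band instead of a birth date further reduces the risk of re-identification.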

The Future of AI for Good: Trends and Innovations

When it comes to AI for good trends, we have observed the following:

- No-code solutions – platforms like Bubble or AppGyver allow people without technical expertise to create AI-based applications for social good (they also reduce the cost of AI projects). For example, an NGO could use a no-code tool to automate volunteer coordination or donation tracking.

- AI for environmental, social, and governance (ESG) goals – AI could also prove useful for monitoring environmental impact, for example by tracking deforestation in satellite imagery, or for improving social protection programs by analyzing community needs.

- AI and human decision-making – AI plays an increasingly important role in decision-making; for example, it can help evaluate crisis data. However, it should never be treated as the ultimate decision-maker. Human involvement is, and will remain, essential to ensure that decisions meet ethical standards.
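Keeping humans as the final decision-makers can be built into the software itself. In this sketch of the crisis-data example, a hypothetical model only assigns urgency scores: the code ranks reports for human review, flags low-confidence scores, and leaves the final decision empty until a named reviewer fills it in. The `CrisisReport` structure, the 0.6 confidence cutoff, and both functions are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CrisisReport:
    report_id: str
    urgency_score: float      # hypothetical model output, 0 (low) to 1 (high)
    model_confidence: float   # the model's confidence in that score, 0 to 1
    human_decision: Optional[str] = None  # only ever set by a human reviewer


def build_review_queue(reports, low_confidence=0.6):
    """Rank reports for human review, most urgent first.

    The model only orders the queue; it never sets `human_decision`.
    Each entry is (report, flagged), where `flagged` marks low-confidence
    scores that reviewers should examine more carefully.
    """
    ranked = sorted(reports, key=lambda r: r.urgency_score, reverse=True)
    return [(report, report.model_confidence < low_confidence) for report in ranked]


def record_decision(report, reviewer, decision):
    """Finalize a report; refuses to do so without a named human reviewer."""
    if not reviewer:
        raise ValueError("a human reviewer must be identified")
    report.human_decision = f"{decision} (reviewed by {reviewer})"
    return report


reports = [
    CrisisReport("r1", urgency_score=0.30, model_confidence=0.90),
    CrisisReport("r2", urgency_score=0.95, model_confidence=0.50),
    CrisisReport("r3", urgency_score=0.70, model_confidence=0.80),
]
for report, flagged in build_review_queue(reports):
    print(report.report_id, "low confidence" if flagged else "ok")
record_decision(reports[1], "reviewer_1", "dispatch team")
```

Recording the reviewer's name with each decision also supports the accountability items in the checklist.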

AI for Good: Balancing the Power of Technology and Ethics

Our Tech to the Rescue initiative at Netguru is living proof that companies can not only develop commercial AI products but also create solutions that benefit the world at large.

We can all help tackle global challenges, whether by reducing emissions, providing access to education, or minimizing the impact of natural disasters. Those who develop AI are in a position to make a particularly positive impact on the world.